The Swiss Cheese Model: Why disasters happen the way they do.

Let’s talk about cheese. Swiss cheese, to be precise.

In high-stakes, mission-critical fields (think aviation, healthcare, nuclear power, or major IT infrastructure providers), disasters don’t just crash-land from nowhere like a rogue meteor.

Nope, they’re more like a cosmic joke where a bunch of tiny screw-ups and lurking issues all line up perfectly, just like those lovely holes in Swiss cheese.

British psychologist James Reason nailed this with his Swiss Cheese Model, which basically says: there are multiple layers of defense, each with its own flaws (holes), and when those holes decide to party together, boom: disaster served on a platter.

The Swiss Cheese Model: Why “Cheese” Is the Right Metaphor

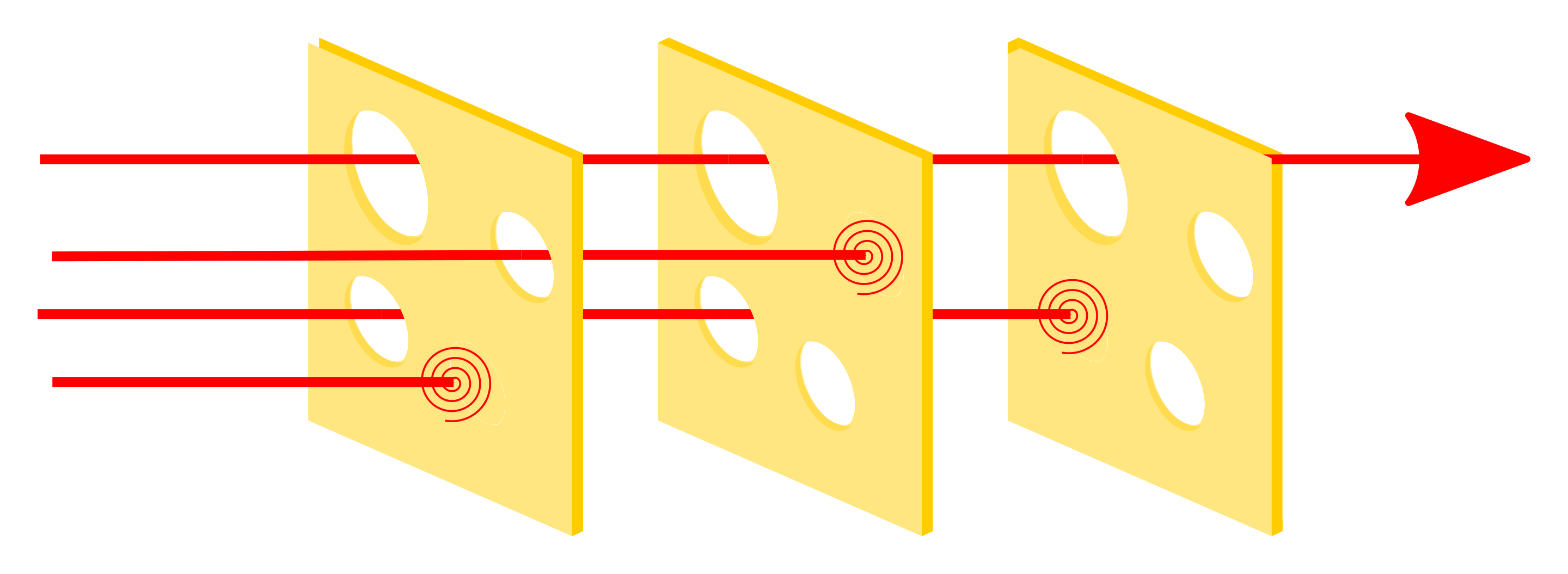

Picture this: your system’s defenses are slices of Swiss cheese. Each slice is a so-called “layer of protection” — it could be tech, processes, or human eyeballs supposedly watching over things.


And those holes? Yeah, those are the cracks where stuff slips through. An accident happens when all those holes align like a perfect Swiss cheese constellation, letting the big bad hazard sail through every single layer like it owns the place.
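To make “holes lining up” a bit more concrete, here’s a minimal Python sketch. It assumes each layer fails to stop a hazard independently, with a made-up per-layer miss probability; the layer names and numbers are hypothetical, not from Reason or any real system. Under that (big) assumption, the chance a hazard gets through every slice is simply the product of the per-layer miss probabilities, and a quick Monte Carlo run should land near the same number.

```python
import math
import random

# Hypothetical layers and the chance each one FAILS to stop a hazard
# (i.e., the hazard hits a hole). Numbers are made up for illustration.
LAYERS = {
    "code review": 0.30,
    "automated tests": 0.20,
    "staged rollout": 0.25,
    "monitoring": 0.40,
}


def breach_probability(miss_probs):
    """Chance a hazard passes every layer, assuming layers fail independently."""
    return math.prod(miss_probs)


def simulate(miss_probs, trials=100_000, seed=42):
    """Monte Carlo estimate: fraction of hazards that find a hole in every slice."""
    rng = random.Random(seed)
    breaches = 0
    for _ in range(trials):
        # all() stops at the first layer that blocks the hazard
        if all(rng.random() < p for p in miss_probs):
            breaches += 1
    return breaches / trials


if __name__ == "__main__":
    probs = list(LAYERS.values())
    print(f"analytic:  {breach_probability(probs):.4f}")
    print(f"simulated: {simulate(probs):.4f}")
```

With these numbers, four fairly leaky slices still let only 0.3 × 0.2 × 0.25 × 0.4 = 0.006 of hazards through. The big caveat is independence: real holes are often correlated (one shared weakness can open several slices at once), and then the true odds can be much worse than the product suggests.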

Active vs. Latent Failures: The Dynamic Duo of Disaster

Here’s where it gets juicy. The model splits failures into two flavors:

- Active Failures: These are the immediate, in-your-face screw-ups by people on the front line. A pilot pressing the wrong button, a dev pushing buggy code: classic “Oops, I did it again.”

- Latent Conditions: These are the silent, lurking evils (bad architecture or design choices, half-assed training, crappy procedures) that sit quietly until they jump out and bite you in the ass.

Put these two together, and you’ve got a recipe for a full-blown system meltdown.
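Here’s a toy way to see why that combination is so nasty, sketched in Python with invented layers and numbers. In this simplification (ours, not Reason’s formal model), an active failure is a hole that opens only some of the time, while a latent condition is a hole that is already open and waiting. The `Layer` class and its fields are hypothetical names for illustration, and layers are again assumed to fail independently.

```python
import math
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    active_miss: float  # chance a front-line slip opens this hole on a given event
    latent_hole: bool = False  # a standing weakness: the hole is open all the time

    def miss_probability(self) -> float:
        # A latent condition means this layer never stops the hazard,
        # no matter how careful people are on the day.
        return 1.0 if self.latent_hole else self.active_miss


def breach_probability(layers: list[Layer]) -> float:
    """Chance a hazard passes every layer, still assuming independent layers."""
    return math.prod(layer.miss_probability() for layer in layers)


healthy = [
    Layer("code review", 0.30),
    Layer("automated tests", 0.20),
    Layer("staged rollout", 0.25),
    Layer("monitoring", 0.40),
]
# Same stack, but (hypothetically) the test suite has silently stopped
# covering the risky code path: a latent condition.
rotten = [
    Layer("code review", 0.30),
    Layer("automated tests", 0.20, latent_hole=True),
    Layer("staged rollout", 0.25),
    Layer("monitoring", 0.40),
]

if __name__ == "__main__":
    print(f"healthy stack:   {breach_probability(healthy):.4f}")  # 0.0060
    print(f"one latent hole: {breach_probability(rotten):.4f}")  # 0.0300
```

In this made-up example, one rotten layer takes the breach odds from 0.006 to 0.03, five times worse, and nobody on the front line did anything differently.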

Root Cause Analysis (RCA): Digging Through the Cheese

So what do you do when your system turns into Swiss cheese? You get out the magnifying glass and do some good old-fashioned Root Cause Analysis (RCA). That means peeling back the layers: not blaming the guy who pressed the wrong button, but tracing the chain of failures, active and latent, that lined up to cause the mess. You find the systemic weaknesses and, ideally, fix the holes instead of just patching the leaks temporarily.

How to Stop Your System from Being Swiss Cheese

Alright, how do you stop your mission-critical system from being a ticking Swiss cheese bomb?

- Defense in Depth: Don’t rely on just one layer. Stack those defenses like you’re building a fortress, so even if one slice has holes, the others cover them. Each extra layer is one more chance to stop an attacker, or a failure, before it gets all the way through.

- Patch It Like It’s Hot: Keep software and hardware up to date, because hackers love unpatched holes like they’re a buffet.

- Continuous Monitoring: Have systems watching the watchers, such as intrusion detection and response, so you catch weird stuff before it explodes.

- Train Your People: Your “human firewall” can be full of holes if you don’t keep the team up to speed on the latest tricks and scams.

- Culture Over Blame: Make it safe for people to report near-misses or problems without fearing the boss’s wrath. After all, hidden holes only get bigger when ignored.
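Defense in depth is the item on this list that maps most directly onto code. Below is a minimal Python sketch of a release gate, assuming a hypothetical `Change` record and three made-up layers (automated tests, peer review, and a canary error-rate threshold of 1%); none of it is a real tool’s API. A change ships only if no layer objects, and the gate reports every layer that caught a problem rather than stopping at the first one, which is handy later when you’re doing the RCA.

```python
from typing import Callable, NamedTuple


class Change(NamedTuple):
    # Hypothetical description of a change headed for production
    tests_passed: bool
    reviewed: bool
    canary_error_rate: float  # fraction of failing requests on the canary


Check = Callable[[Change], bool]  # returns True if the change passes this layer

# Each layer is a separate check; names and thresholds are illustrative assumptions.
LAYERS: list[tuple[str, Check]] = [
    ("automated tests", lambda c: c.tests_passed),
    ("peer review", lambda c: c.reviewed),
    ("canary rollout", lambda c: c.canary_error_rate < 0.01),
]


def gate(change: Change) -> list[str]:
    """Run every layer and return the names of the layers that blocked the change.

    An empty list means no layer objected, so the change may ship.
    """
    return [name for name, check in LAYERS if not check(change)]


if __name__ == "__main__":
    risky = Change(tests_passed=True, reviewed=False, canary_error_rate=0.05)
    print(gate(risky))  # ['peer review', 'canary rollout']
```

Here the tests happened to pass (a hole in that slice), but two other layers still caught the change. That’s the whole point of stacking slices.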

Final Thoughts: It’s All About Layers

So here’s the kicker: accidents in complex, mission-critical systems aren’t just about one idiot button press or one faulty widget. They’re about layers and layers of screw-ups lining up like a Swiss cheese conspiracy.

The Swiss Cheese Model reminds us that the solution isn’t witch hunts or finger-pointing but system-wide thinking: relentlessly finding, patching, and preventing those pesky holes.

So next time your system fails, remember: it’s not just you; it’s the entire darn cheese platter.